AI Assistance in Unmasking Deceptive Propaganda Efforts

Cyberspace miscreants are using AI to amplify their deceptive campaigns, spinning captivating yet false narratives.

By Dr. Mark Finlayson, Associate Professor of Computer Science at Florida International University

and Azwad Anjum Islam, a Ph.D. student in Computing and Information Sciences at the same university

Hear Me Out

Hard facts rarely outweigh a well-told story. What wins us over is familiarity and relatability. Whether it's a heart-wrenching tale, a personal testimony, or a viral meme that echoes deep cultural roots, stories stick with us, move us, and shape our beliefs.

But when these stories fall into the wrong hands, they become dangerous, and social media platforms have greatly amplified such narrative warfare campaigns. After revelations that Russian entities had spread fabricated election-related content on Facebook ahead of the 2016 U.S. presidential election, the need for AI defenses against this kind of manipulation became glaringly apparent.

Although AI is unfortunately fueling the fire, it is also becoming one of our strongest tools against these manipulative narratives. Researchers are using machine learning techniques to pick apart disinformation content.

At Florida International University's Cognition, Narrative & Culture Lab, we're developing AI tools to detect disinformation campaigns that use storytelling to persuade. We're training AI to go beyond surface-level language analysis: to uncover narrative structures, track personas and timelines, and decode cultural references.

Disinformation vs. Misinformation

In 2024, the Department of Justice disrupted a Kremlin-funded operation that used nearly a thousand fake social media accounts to spread fabricated narratives. This wasn't an isolated incident. It was part of a larger organized campaign, partly powered by AI.

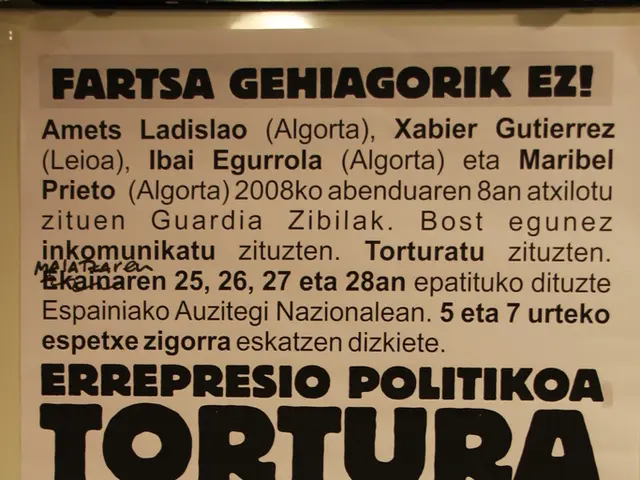

Disinformation goes a step beyond misinformation. Misinformation is simply false or inaccurate information; disinformation is deliberately fabricated and shared to deceive and manipulate. Consider the viral video from October 2024 that claimed to show a Pennsylvania election worker destroying mail-in ballots marked for Donald Trump: millions viewed it before the FBI traced it back to a Russian influence operation.

These examples illustrate how foreign influence campaigns can fabricate false narratives, amplify them disproportionately, and use them to exploit political divisions and shape Americans' opinions.

Humans are wired to make sense of the world through stories. Narratives don't just help us remember; they spark emotional responses and shape how we interpret complex events. Facts and figures may provide cold, hard data, but it's the story of a sea turtle with a plastic straw stuck in its nostril that stirs empathy and concern for the environment.

This emotional power is precisely why narratives are such effective tools of persuasion, and why they're so dangerous in the hands of malicious actors. A compelling story can override skepticism and shift opinions more effectively than a barrage of facts and figures.

Distinguishing Real from False

Analyzing usernames, cultural context, and narrative structure can help identify manipulative narratives that don't add up. Usernames often signal demographic and identity traits, and irregularities in them may hint at a manufactured persona.

A handle like @JamesBurnsNYT might suggest a journalist affiliated with The New York Times, while a handle like @JimB_NYC could indicate a casual user based in New York City. However, a handle alone can't confirm authenticity; interpreting it within the broader narrative an account presents helps AI systems better evaluate whether the identity is legitimate.
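To make the idea concrete, here is a minimal Python sketch of a first step such a system might take: splitting a handle into name-like tokens and flagging suffixes that hint at an affiliation or a place. The suffix table, the function names, and the tokenizing rule are hypothetical illustrations, not the lab's method. In line with the caveat above, the output is only a weak signal to be weighed against the rest of an account's narrative.

```python
import re

# Hypothetical table of suffixes that hint at an affiliation or a place.
# A real system would need a large curated list, and a match is only a hint:
# anyone can put "NYT" in a handle.
AFFILIATION_HINTS = {
    "NYT": "news outlet (The New York Times?)",
    "NYC": "location (New York City?)",
}

# Acronyms/initials (capital runs not followed by a lowercase letter),
# capitalized or lowercase words, or runs of digits.
TOKEN_PATTERN = re.compile(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+")


def handle_tokens(handle: str) -> list[str]:
    """Split a handle like '@JamesBurnsNYT' into ['James', 'Burns', 'NYT']."""
    tokens: list[str] = []
    for part in handle.lstrip("@").split("_"):
        tokens.extend(TOKEN_PATTERN.findall(part))
    return tokens


def handle_profile(handle: str) -> dict:
    """Collect weak identity signals from a handle; not proof of authenticity."""
    tokens = handle_tokens(handle)
    # Capitalized multi-letter words (not initials or acronyms) look like name parts.
    name_parts = [t for t in tokens if len(t) > 1 and t[0].isupper() and t[1:].islower()]
    hints = [AFFILIATION_HINTS[t] for t in tokens if t in AFFILIATION_HINTS]
    return {"tokens": tokens, "name_parts": name_parts, "affiliation_hints": hints}


for h in ["@JamesBurnsNYT", "@JimB_NYC"]:
    print(h, handle_profile(h))
```

For @JamesBurnsNYT the sketch finds a full first and last name plus a news-outlet hint, while @JimB_NYC yields a shortened name, an initial, and a location hint. A narrative-aware system would then check whether what the account actually posts is consistent with those signals.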

Foreign adversaries can craft handles that mimic trustworthy voices or affiliations, exploiting our natural inclination to believe sources that seem familiar.

Humans naturally reconstruct the chronological order of a story even when it is told out of sequence, but AI systems struggle to make sense of nonlinear storytelling. Our lab is working on methods for timeline extraction, which help AI identify the events in a narrative and understand their sequence.
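As a toy illustration of what a timeline means here, the Python sketch below separates the order in which events are told from the order in which they happened, and flags flashbacks. It assumes, hypothetically, that each event has already been extracted with a normalized date; inferring those temporal relations from raw text is the hard part that timeline-extraction research tackles, and this is not the lab's method. The `Event` class, the function names, and the sample story are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Event:
    text: str          # short description of the event
    told_at: int       # position in the narrative (the order the story tells it)
    happened_on: date  # ASSUMED already extracted and normalized from the text


def chronological(events: list[Event]) -> list[Event]:
    """Reorder events by when they happened; ties keep narrative order."""
    return sorted(events, key=lambda ev: (ev.happened_on, ev.told_at))


def flashbacks(events: list[Event]) -> list[Event]:
    """Return events narrated after something that happened later (told out of order)."""
    found: list[Event] = []
    latest: Optional[date] = None
    for e in sorted(events, key=lambda ev: ev.told_at):
        if latest is not None and e.happened_on < latest:
            found.append(e)
        latest = e.happened_on if latest is None else max(latest, e.happened_on)
    return found


# Hypothetical story, listed in the order it is told.
story = [
    Event("A storm hits the town", 0, date(2023, 9, 10)),
    Event("Residents recall last year's flood", 1, date(2022, 9, 1)),
    Event("The town begins rebuilding", 2, date(2023, 10, 15)),
]

print([e.text for e in chronological(story)])  # flood, then storm, then rebuilding
print([e.text for e in flashbacks(story)])     # only the flood memory
```

Sorting by date works only because the sketch assumes absolute dates. Real narratives usually give relative cues such as "last year" or "after the storm", so a practical system might instead build a graph of before/after relations and order events from that.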

Narratives can also incorporate symbols and objects that carry different meanings across cultures. Across language and cultural barriers, AI systems risk misinterpreting these nuances. By training AI on diverse cultural narratives, we can improve its cultural literacy and better detect disinformation that weaponizes symbolism, sentiment, and storytelling within targeted communities.

Who benefits from narrative-aware AI? Intelligence analysts, crisis response agencies, social media platforms, researchers, educators, and ordinary users alike can use it to make sense of and counter the deceptive narratives that disinformation campaigns spread.
